Tanner Schmidt

I am a computer vision researcher interested in real-time vision, unsupervised visual representation learning, geometry-based computer vision, and vision for robotics. Currently I'm working as a Research Scientist at Meta Reality Labs.

First-Author Publications:

Segment This Thing: Foveated Tokenization for Efficient Point-Prompted Segmentation

Tanner Schmidt, Richard Newcombe

CVPR 2025

Project | Paper | Code | Video

A modification of the Segment Anything Model (SAM), increasing efficiency through foveated tokenization.

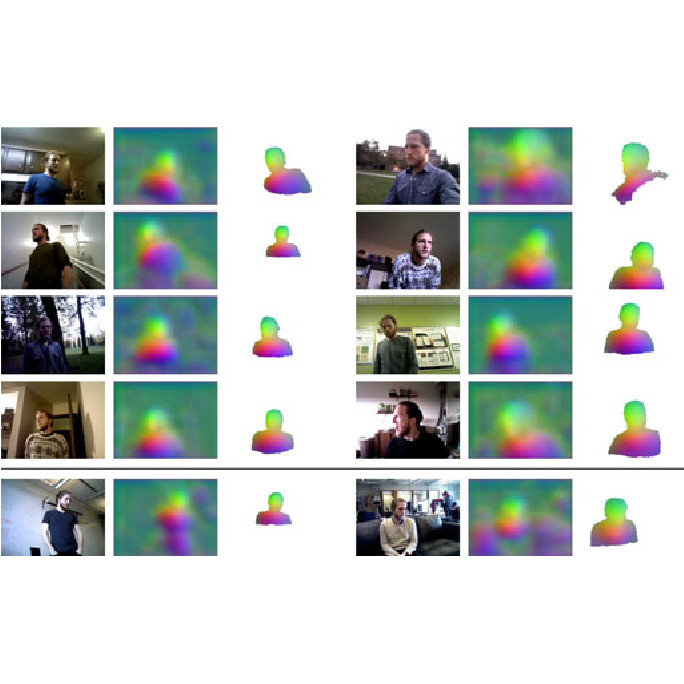

Self-Supervised Visual Descriptor Learning for Dense Correspondence

Tanner Schmidt, Richard Newcombe, Dieter Fox

IEEE Robotics and Automation Letters 2 (2016)

Dense visual descriptors are trained using a contrastive loss and similarity labels provided by a 3D reconstruction system.

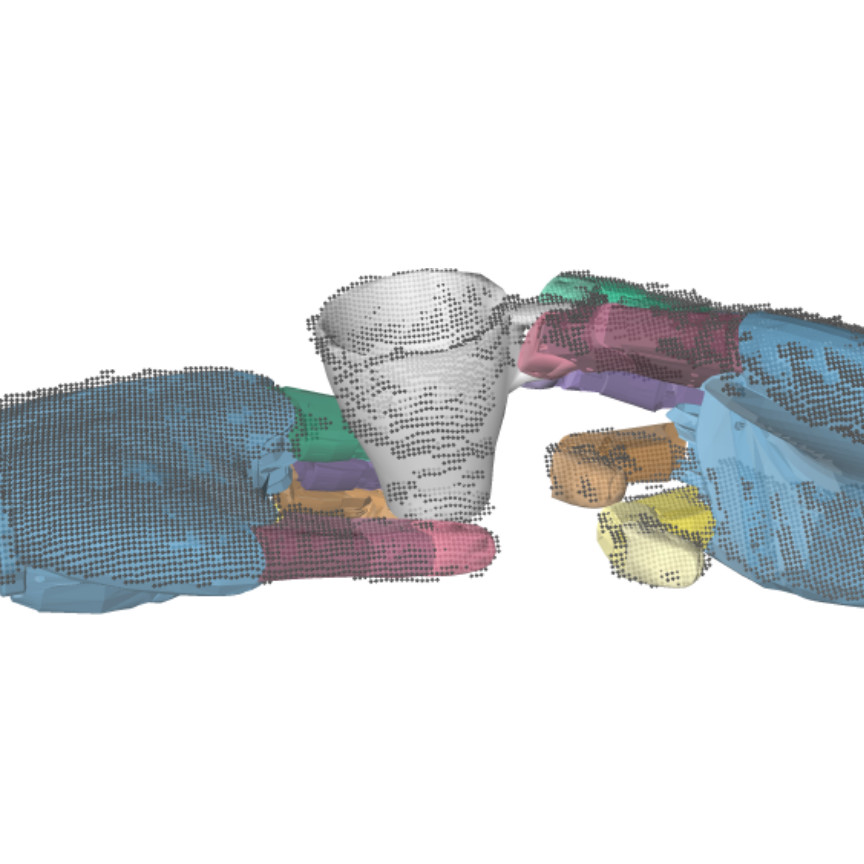

Depth-based Tracking with Physical Constraints for Robot Manipulation

Tanner Schmidt, Katharina Hertkorn, Richard Newcombe, Zoltan Marton, Michael Suppa, Dieter Fox

ICRA 2015

A depth-based tracker provides real-time estimates of the pose of robot hands and target objects for tele-operated grasping.

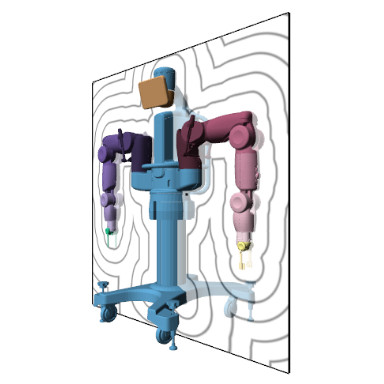

DART: Dense Articulated Real-Time Tracking

Tanner Schmidt, Richard Newcombe, Dieter Fox

RSS 2014

A CUDA-accelerated model-based tracker using a signed distance function representation of target objects.

Datasets:

YCB-Video

A dataset of RGB-D videos of static configurations of YCB objects. Annotations of object poses are provided.

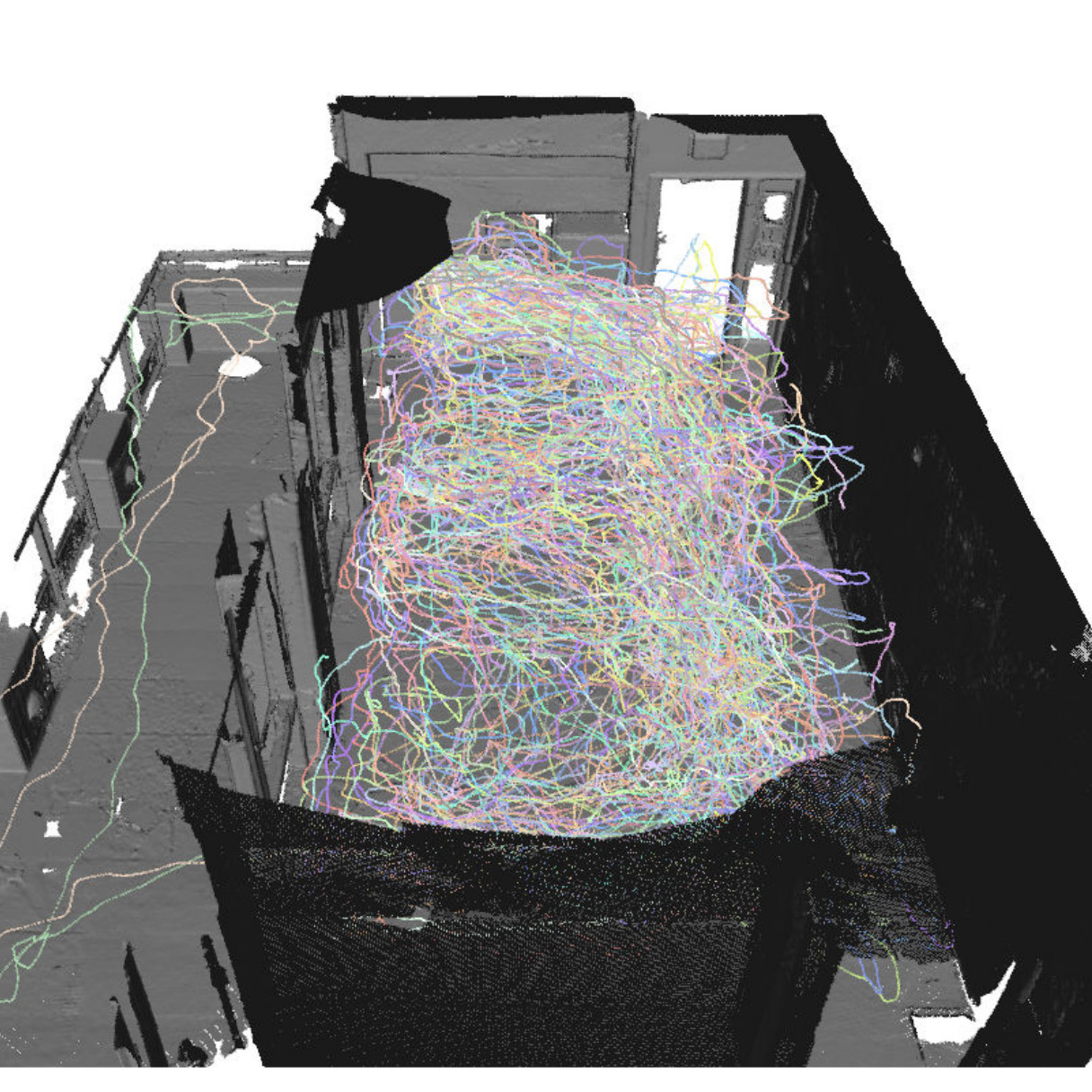

Jaech Gallery Dataset

A dataset of RGB-D videos capturing the same scene in a variety of lighting conditions and furniture configurations.